Research

My research focuses on building intelligent robot systems that can make decisions under uncertainty in dynamic, human-centered environments. I view this as a problem of finding suitable task representations for optimal computational reasoning, spanning design, planning, control and learning. As a test platform, I am particularly interested in robust grasping and compliant manipulation, since the human hand is a remarkable tool, as shown by its extraordinary motor capability and rich sense of touch.

Grasp Planning

Choosing an optimal grasp for a given object is one of the core problems in robotic grasping. Optimality is constrained by the hand kinematics, the task, and the object properties (shape, dynamics, friction, etc.). In this research, we study how hand kinematics, object shape and task requirements influence grasp planning. This work covers both traditional model-based planning and learning-based approaches.

One-Shot Grasp Planning

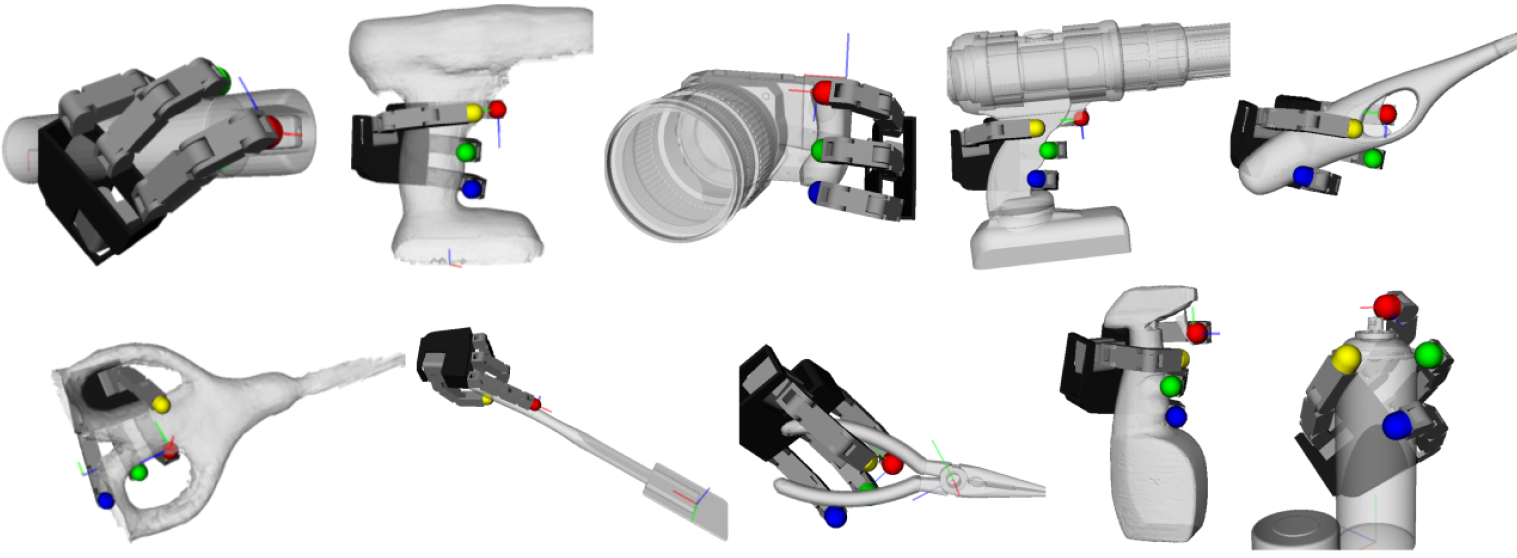

Optimal grasp synthesis has traditionally been solved in two steps: first determining optimal grasping points according to a specific quality criterion, and then determining how to shape the hand to produce these grasping points. Because grasp optimality depends as much on the positions of the contact points as on the configuration of the robot hand, it is desirable to solve both in a single step. This research takes advantage of recent developments in nonlinear optimization and formulates grasp synthesis as a single constrained optimization problem, generating grasps that are both feasible for the hand’s kinematics and optimal according to a force-related quality measure.

Dexterous Grasping under Shape Uncertainty

An important challenge in robotics is to achieve robust object grasping and manipulation in the presence of noise and uncertainty. This research presents an approach to dexterous grasping under shape uncertainty. In our approach, the uncertainty in object shape is parametrized and incorporated as a constraint into grasp planning. The approach is used to plan feasible hand configurations that realize the planned contacts on different robotic hands (Barrett Hand and Allegro Hand).

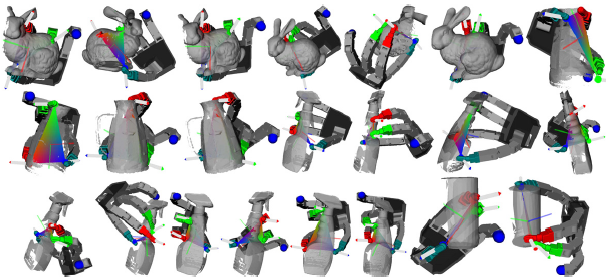

Grasp with Task-specific Contact

Generating robotic grasps for given tasks is a difficult problem. This research proposes a learning-based approach to generate suitable partial power grasps for a set of tool-use tasks. First, a number of valid partial power grasps are sampled in simulation and encoded in a probabilistic model that captures the relations among the task-specific contact, the graspable object feature and the finger joints. With the learned model, suitable grasps can be generated online given the task-specific contact. Moreover, a grasp adaptation strategy is proposed to locally adjust the specified contact, improving both grasp feasibility and the quality of the final grasp. We demonstrate the effectiveness of our approach on a variety of tool-use tasks using the 16-DOF Allegro Hand.
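
The page does not specify the form of the probabilistic model. As a minimal sketch, assume a single joint Gaussian over one task-contact coordinate `c` and one finger-joint angle `q`; generating a grasp online for a given contact then reduces to Gaussian conditioning. The function `condition_joint_on_contact` and all parameter values are hypothetical.

```python
def condition_joint_on_contact(mu_c, mu_q, var_c, var_q, cov_cq, c):
    """Mean and variance of joint angle q given an observed contact value c.

    Conditioning a 2-D Gaussian p(c, q) on c:
      mean = mu_q + cov_cq / var_c * (c - mu_c)
      var  = var_q - cov_cq**2 / var_c
    """
    gain = cov_cq / var_c
    mean = mu_q + gain * (c - mu_c)
    var = var_q - gain * cov_cq
    return mean, var

# Hypothetical model parameters (contact in cm, joint angle in rad).
mean, var = condition_joint_on_contact(mu_c=2.0, mu_q=0.8, var_c=0.25,
                                       var_q=0.04, cov_cq=0.05, c=2.5)
print(round(mean, 3), round(var, 3))
```

With several contact and joint dimensions, the scalar division becomes the inverse of the contact covariance matrix, and a mixture model would blend several such conditionals.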

Grasp Adaptation

For a long time, the robotic grasping community has focused mainly on grasp planning. For real-world applications, however, it is important to know whether a planned grasp is stable. In this research, we study how to predict the stability of a given grasp using past grasping experience, viewed from the perspective of the grasped object. If the learned stability estimator predicts a grasp to be unstable, an adaptation strategy is devised to stabilize it. Moreover, to close the loop between initial planning and adaptation, we design a closed-loop adaptation strategy that stabilizes the grasp in real time while taking the hand's grasping capability into account.

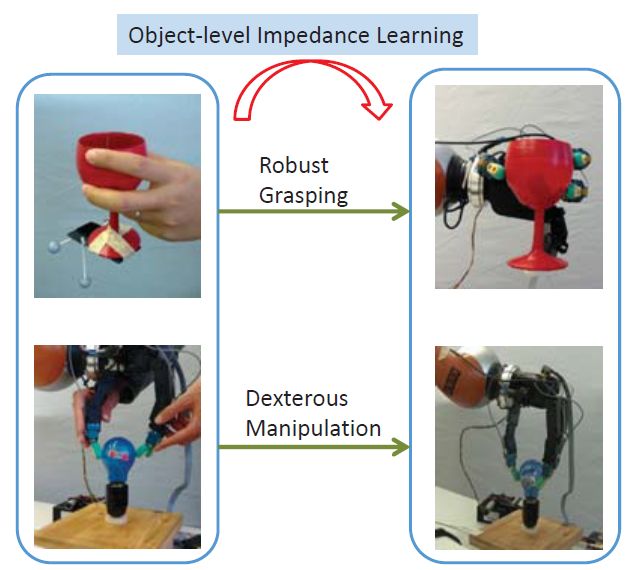

Learning Object-level Impedance Control

Object-level impedance control is of great importance for object-centric tasks, such as robust grasping and dexterous manipulation. Despite recent progress on this topic, how to specify the desired object impedance for a given task remains an open issue. In this work, we decompose the object’s impedance into two complementary components: the impedance for stable grasping and the impedance for object manipulation. We then present a method to learn the desired manipulation impedance (stiffness) of the object from human demonstration data. The approach is validated on two tasks, robustly grasping a wine glass and inserting a light bulb, using the 16-DOF Allegro Hand equipped with SynTouch tactile sensors.
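
The page does not describe the learning method or how the two components are combined. Purely as an illustration, assume one-dimensional demonstration data of object displacements `dx` and measured forces `f`: a least-squares stiffness estimate is K = sum(f_i * dx_i) / sum(dx_i^2), and, as an assumption made here, this manipulation stiffness is simply added to a fixed grasp-stability stiffness in a spring-damper law. The damping value and all numbers are hypothetical.

```python
def fit_stiffness(displacements, forces):
    """Least-squares fit of f = K * dx through the origin (1-D)."""
    num = sum(f * dx for f, dx in zip(forces, displacements))
    den = sum(dx * dx for dx in displacements)
    return num / den

def impedance_force(k_grasp, k_manip, damping, x_des, x, v):
    """Object-level spring-damper law; the two stiffness terms are summed."""
    k_total = k_grasp + k_manip
    return k_total * (x_des - x) - damping * v

# Hypothetical demonstration data: object displacement (m) and force (N).
dx = [0.01, 0.02, 0.03, 0.04]
f = [2.1, 3.9, 6.0, 8.1]
k_manip = fit_stiffness(dx, f)            # about 201 N/m for these numbers
force = impedance_force(k_grasp=50.0, k_manip=k_manip, damping=2.0,
                        x_des=0.0, x=0.01, v=0.0)
print(round(k_manip, 1), round(force, 3))
```

In practice the stiffness would be a matrix over the object's six degrees of freedom, possibly varying along the task.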

Learning Grasp Stability

To perform robust grasping, a multi-fingered robotic hand should be able to adapt its grasp configuration, i.e., how the object is grasped, to maintain grasp stability. Such a change of grasp configuration is called grasp adaptation; it depends on the controller, the sensory feedback employed and the types of uncertainty inherent to the problem. This paper proposes a grasp adaptation strategy to deal with uncertainty about the physical properties of objects, such as the object weight and the friction at the contact points. Based on an object-level impedance controller, a grasp stability estimator is first learned in the object frame. Once the stability estimator predicts a grasp to be unstable, a grasp adaptation strategy is triggered according to the similarity between the new grasp and the training examples. Experimental results demonstrate that our method improves grasping performance on novel objects whose physical properties differ from those of the training objects.
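
The page does not specify the estimator type. As a hedged sketch of the predict-then-adapt loop, suppose each training grasp is summarized by a small object-frame feature vector with a stable/unstable label; a k-nearest-neighbour vote predicts stability, and adaptation moves the grasp toward the most similar stable training example. The features, data, `k` and `step` are all invented for illustration.

```python
import math

# Hypothetical training grasps: (object-frame feature vector, is_stable).
# Features here are invented: (normalized grip force, normalized object mass).
TRAINING = [
    ((0.9, 0.2), True),
    ((0.8, 0.4), True),
    ((0.4, 0.6), False),
    ((0.3, 0.3), False),
]

def predict_stable(x, k=3):
    """Majority vote over the k nearest training grasps."""
    nearest = sorted(TRAINING, key=lambda item: math.dist(x, item[0]))[:k]
    votes = sum(1 for _, stable in nearest if stable)
    return 2 * votes > k

def adapt(x, step=0.5):
    """Move x part of the way toward the most similar stable training grasp."""
    stable = [f for f, s in TRAINING if s]
    target = min(stable, key=lambda f: math.dist(x, f))
    return tuple(xi + step * (ti - xi) for xi, ti in zip(x, target))

grasp = (0.5, 0.5)
for _ in range(20):  # bounded number of adaptation steps
    if predict_stable(grasp):
        break
    grasp = adapt(grasp)
print(grasp, predict_stable(grasp))
```

Here the initial grasp is predicted unstable, and a single adaptation step toward the nearest stable example is enough to flip the prediction.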

Dynamic Grasp Adaptation

We present a unified framework for grasp planning and in-hand grasp adaptation using visual, tactile and proprioceptive feedback. The main objective of the framework is to enable fingertip grasping by addressing changes in object weight, slippage and external disturbances. For this purpose, we introduce the Hierarchical Fingertip Space, a representation that enables optimization for both efficient grasp synthesis and online finger gaiting. Grasp synthesis is followed by a grasp adaptation step that combines grasp force adaptation through impedance control with regrasping/finger gaiting when force adaptation alone is not sufficient. Experimental evaluation is conducted on an Allegro Hand mounted on a KUKA LWR arm.

Compliant Manipulation

Object manipulation is a challenging task for robotics, as the physics of object interaction is complex and hard to express analytically. Extending our robotic grasping research, we study the underlying interaction model between the object and the environment. To enable robust physical interaction with the environment, we have investigated different models for representing the task, including modular approaches and manifold learning. To facilitate model learning, we adopt the learning-from-demonstration framework, in which data collected from demonstrations are used for model training.

Learning Manipulation Strategies from Human Demonstration

Here we introduce a modular approach for learning a manipulation strategy from human demonstration. First, we record a human performing a task that requires an adaptive control strategy under different conditions, i.e., different task contexts. We then perform a modular decomposition of the control strategy, using the phases of the recorded actions to guide segmentation. Each module represents part of the strategy and is encoded as a pair of forward and inverse models. All modules contribute to the final control policy; their recommendations are integrated through a weighting scheme based on each module's estimated error in the current task context. We validate our approach both in simulation, for clarity, and on a real robot platform, to demonstrate its robustness and capacity to generalize. The robot task is opening bottle caps. We show that our approach can modularize an adaptive control strategy and generate appropriate motor commands for the robot to accomplish the complete task, even for novel bottles.
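
The error-based weighting can be illustrated with a small sketch in the spirit of paired forward/inverse-model architectures such as MOSAIC (named only as an analogy; the page does not say which weighting rule is used). Each module's forward model predicts the next state, its weight is a softmax of the negative squared prediction error, and the final command blends the modules' inverse-model outputs. The module outputs and the scale `sigma` are hypothetical.

```python
import math

def responsibilities(predicted, observed, sigma=0.1):
    """Softmax of -error^2 / (2 * sigma^2) across modules."""
    scores = [-(p - observed) ** 2 / (2 * sigma ** 2) for p in predicted]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def blended_command(commands, weights):
    """Weighted sum of the modules' inverse-model commands."""
    return sum(c * w for c, w in zip(commands, weights))

# Hypothetical values: each module's forward-model prediction of cap rotation
# (rad) and its inverse-model torque recommendation (N*m).
predicted_rotation = [0.50, 0.42, 0.10]
module_torques = [1.2, 0.8, 0.1]
weights = responsibilities(predicted_rotation, observed=0.45)
command = blended_command(module_torques, weights)
print([round(w, 3) for w in weights], round(command, 3))
```

Modules whose forward models explain the current context well dominate the command, while a module with a large prediction error (here the third) contributes almost nothing.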

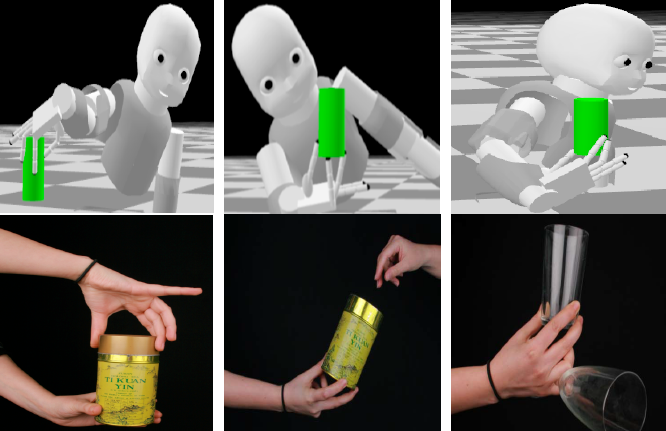

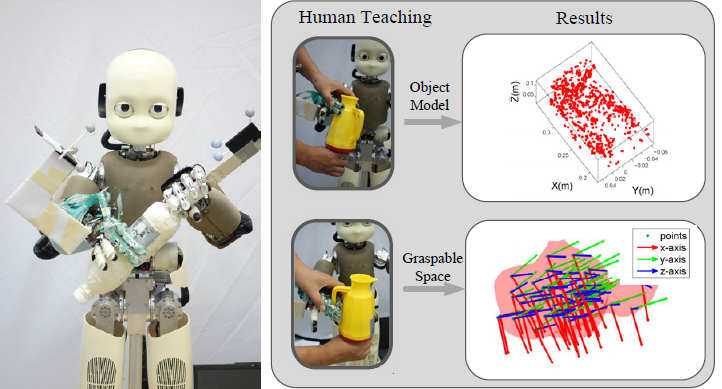

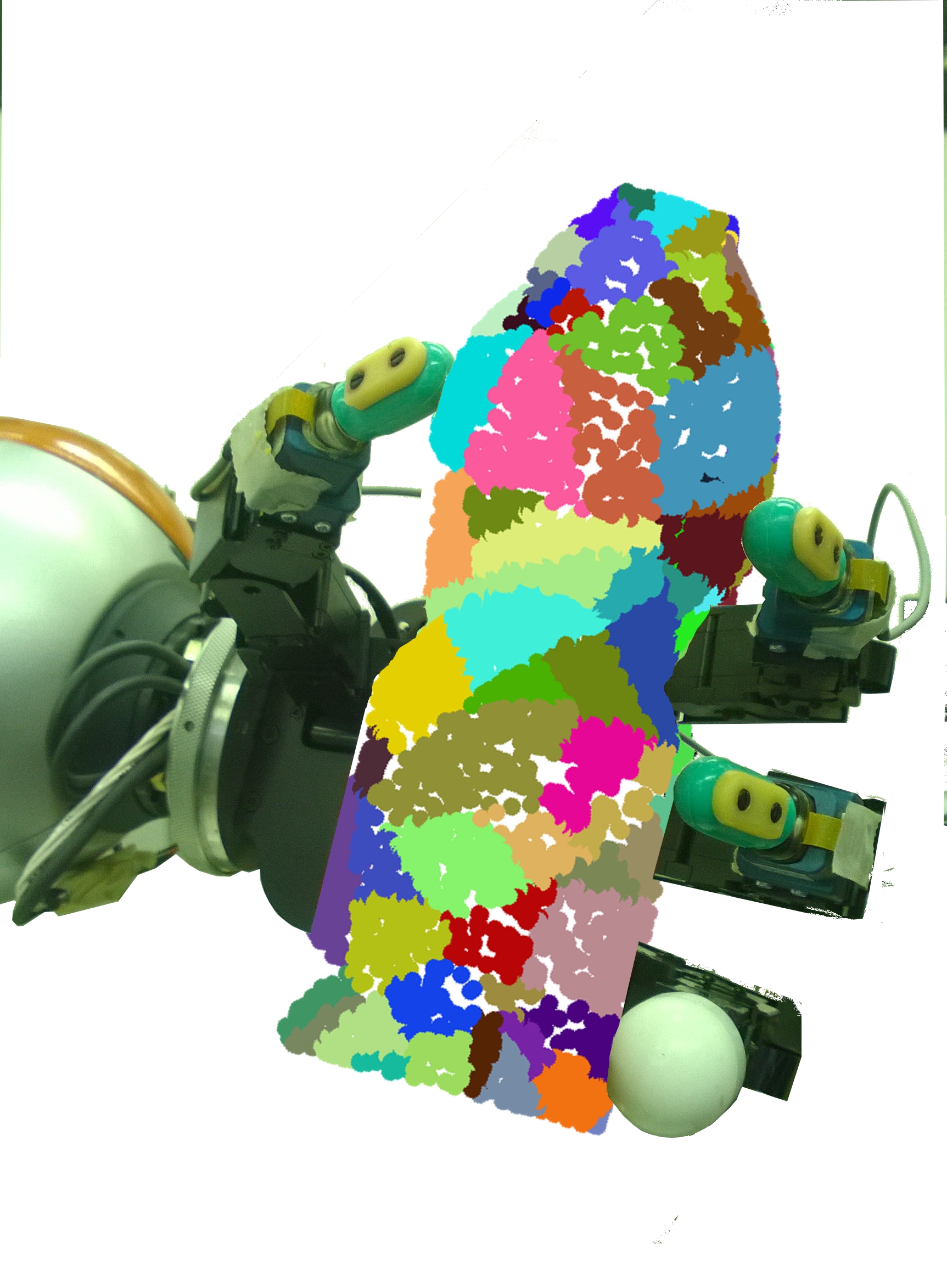

Bimanual Compliant Tactile Exploration

Humans have an incredible capacity to learn the properties of objects by pure tactile exploration with their two hands. As robots move into human-centred environments, tactile exploration becomes increasingly important, since vision is easily occluded by obstacles or can fail under varying illumination. In this paper, we present our first results on bimanual compliant tactile exploration, with the goal of identifying objects and grasping them. An exploration strategy is proposed to guide the motion of the two arms and fingers along the object. From this tactile exploration, a point cloud is obtained for each object. As the point cloud is intrinsically noisy and non-uniformly distributed, a filter based on Gaussian Processes is proposed to smooth the data. The smoothed data are used at runtime for object identification. Experiments on an iCub humanoid robot have been conducted to validate our approach.
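
The page does not detail the filter. A minimal sketch of Gaussian-process smoothing, assuming a 1-D profile (e.g. surface height sampled along one exploration path), a squared-exponential kernel and fixed hyperparameters `length` and `noise`: the smoothed value at a query point x* is the GP posterior mean k*^T (K + s^2 I)^-1 y, where K is the kernel matrix of the samples, k* the kernel vector between x* and the samples, and s the noise standard deviation. Hyperparameters and data are hypothetical; a real tactile point cloud would use 2-D or 3-D inputs.

```python
import math

def rbf(a, b, length=0.5):
    """Squared-exponential kernel with unit signal variance."""
    return math.exp(-((a - b) ** 2) / (2 * length ** 2))

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x

def gp_smooth(xs, ys, queries, length=0.5, noise=0.1):
    """GP posterior mean (zero prior mean) at each query point."""
    n = len(xs)
    K = [[rbf(xs[i], xs[j], length) + (noise ** 2 if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    alpha = solve(K, ys)
    return [sum(rbf(q, xs[i], length) * alpha[i] for i in range(n))
            for q in queries]

# Hypothetical noisy samples of y = sin(x) along an exploration path.
xs = [0.0, 0.4, 0.8, 1.2, 1.6, 2.0, 2.4, 2.8]
noise_terms = [0.05, -0.08, 0.06, -0.04, 0.07, -0.06, 0.03, -0.05]
ys = [math.sin(x) + e for x, e in zip(xs, noise_terms)]
smoothed = gp_smooth(xs, ys, xs)
print([round(v, 2) for v in smoothed])
```

A GP is convenient for non-uniformly distributed samples because the posterior mean can be queried at any location, not only at the measured points.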

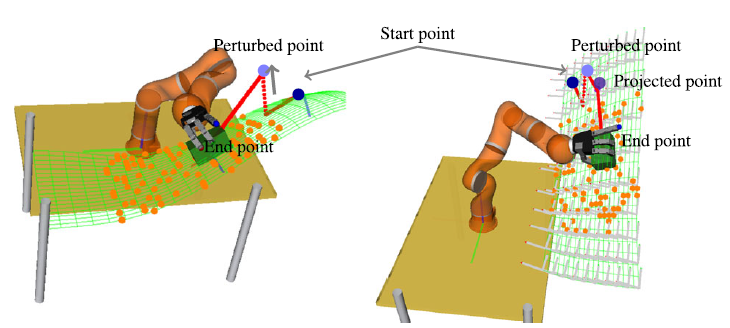

Learning Task Manifold

Reliable physical interaction is essential for many important challenges in robotic manipulation. In this work, we consider Constrained Object Manipulation (COM) tasks, i.e., tasks in which constraints are imposed on the grasped object rather than on the robot’s configuration. To enable robust physical interaction with the environment, this paper presents a manifold learning approach that encodes the COM task as a vector field. This representation enables intuitive, task-consistent adaptation based on an object-level impedance controller. Simulations and experimental evaluations demonstrate the effectiveness of our approach on several typical COM tasks, including dexterous manipulation and contour following.
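
As an illustration of encoding a constraint as a vector field (a hand-designed field, not the learned manifold, which the page does not describe), consider contour following on a circle of radius `radius`: the desired velocity combines a tangential term that moves the object along the contour and a normal term that pulls it back onto the contour. Integrating the field from an off-contour start, as after a disturbance, should converge to the circle. The circle, `speed` and `gain` are hypothetical.

```python
import math

def contour_field(x, y, radius=1.0, speed=1.0, gain=2.0):
    """Desired planar velocity: tangent to the circle plus radial correction."""
    r = math.hypot(x, y)
    ux, uy = x / r, y / r              # outward unit normal
    tx, ty = -uy, ux                   # counter-clockwise unit tangent
    correction = gain * (radius - r)   # positive when inside the circle
    return (speed * tx + correction * ux, speed * ty + correction * uy)

# Euler integration from a perturbed start point outside the contour.
x, y, dt = 1.5, 0.0, 0.01
for _ in range(500):
    vx, vy = contour_field(x, y)
    x, y = x + vx * dt, y + vy * dt
print(round(math.hypot(x, y), 3))
```

Because the field is defined everywhere, a disturbance does not require replanning: the object is simply driven back toward the constraint while still progressing along it.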

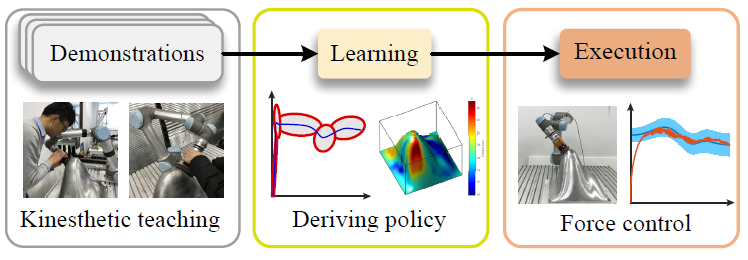

Learning Force-based Skills

Many human manipulation skills are force-relevant or even force-dominant, such as opening a bottle cap or assembling furniture. However, endowing a robot with these skills remains difficult, largely due to the complexity of representing and planning them. This research presents a learning-based approach to transferring force-dominant skills from human demonstration to a robot. First, the force-dominant skill is encapsulated in a statistical model whose key parameters are learned from the demonstrated data (motion and force). Second, based on the learned skill model, a task planner is devised that specifies the motion and/or force profile for a given manipulation task. Finally, the learned skill model is integrated with an adaptive controller that provides task-consistent force adaptation during online execution. The effectiveness of the proposed approach is validated in two experimental scenarios: complex surface polishing and peg-in-hole assembly.
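
The page does not name the statistical model. A common choice for this kind of skill encoding is a Gaussian mixture model queried by Gaussian mixture regression (GMR); the sketch below assumes that choice, with a hypothetical two-component model over (path progress `s`, normal force `f`) whose parameters are made up rather than learned, and returns the expected force at a query progress value.

```python
import math

# Hypothetical GMM over (s, f): s = normalized progress along a polishing path,
# f = normal force in N. Each entry: (prior, mean_s, mean_f, var_s, cov_sf).
COMPONENTS = [
    (0.5, 0.25, 5.0, 0.02, 0.05),
    (0.5, 0.75, 10.0, 0.02, 0.05),
]

def gauss_pdf(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def gmr_force(s):
    """Expected force given s: responsibility-weighted conditional means."""
    weights = [p * gauss_pdf(s, ms, vs) for p, ms, _, vs, _ in COMPONENTS]
    total = sum(weights)
    force = 0.0
    for w, (_, ms, mf, vs, csf) in zip(weights, COMPONENTS):
        conditional_mean = mf + csf / vs * (s - ms)
        force += (w / total) * conditional_mean
    return force

for s in (0.25, 0.5, 0.75):
    print(s, round(gmr_force(s), 2))
```

A planner could query such a model along the path to obtain a reference force profile, which an adaptive controller would then track and adjust online.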

Recent Works

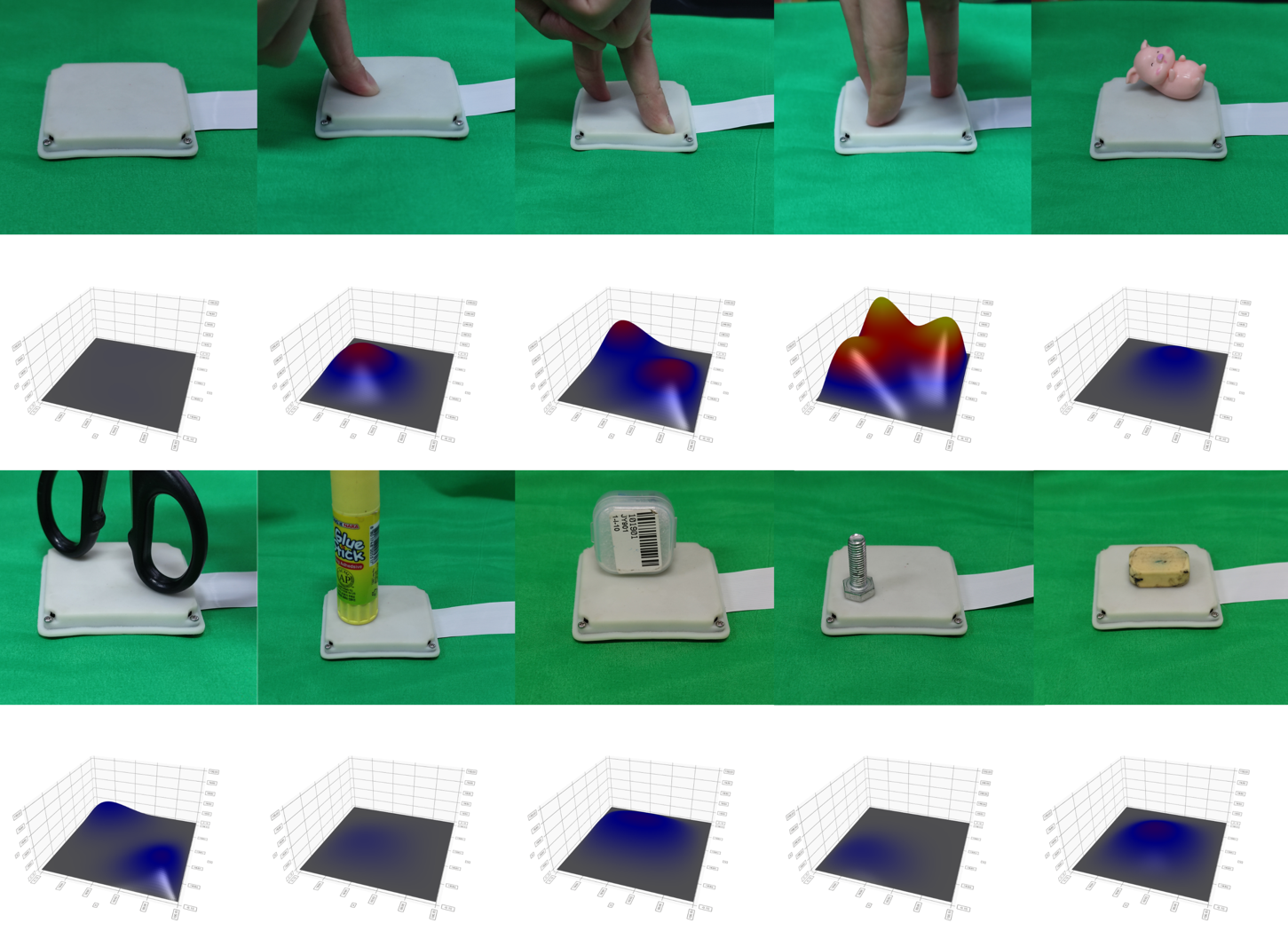

A Biomimetic Tactile Palm for Robotic Object Manipulation

Tactile sensing plays a vital role in human hand function for object exploration, grasping and manipulation. Many types of tactile sensors have been designed over the past decades, mainly for either the fingertips (for grasping) or the upper body (for human-robot interaction). In this paper, we design a novel soft tactile sensor that mimics the functionality of the human palm and can estimate the contact state of different objects. The tactile palm consists of three main parts: an electrode array, a soft cover skin and a resistive liquid layer between them. The design principle and fabrication process are described in detail, and a number of experiments showcase the effectiveness of the proposed design.

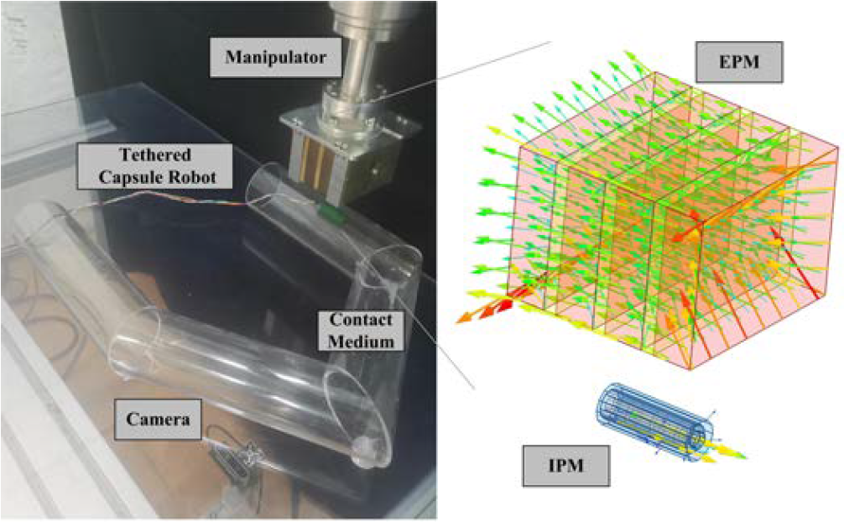

Learning Friction Model for Magnet-actuated Tethered Capsule Robot

The potential diagnostic applications of magnet-actuated capsules have grown considerably in recent years. Most of these applications demand accurate position control of the capsule. However, the friction between the robot and the environment, as well as the drag force from the tether, plays a significant role in the motion control of the capsule. Moreover, these forces, especially friction, are typically hard to model in advance. In this paper, we first design a magnet-actuated tethered capsule robot in which the driving magnet is mounted on the end of a robotic arm. We then propose a learning-based approach to model the friction force between the capsule and the environment, with the goal of increasing the control accuracy of the whole system. Finally, several real-robot experiments showcase the effectiveness of the proposed approach.
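
The page does not say which friction model is learned. As a hedged baseline only, a classic Coulomb-plus-viscous model F = F_c * sign(v) + b * v can be fitted to logged velocity/force pairs by ordinary least squares; a learned model would replace this fixed form. The data below are synthetic and noise-free, so the fit should recover the generating parameters.

```python
import math

def fit_coulomb_viscous(velocities, forces):
    """Least-squares fit of F = fc * sign(v) + b * v via 2x2 normal equations."""
    s = [math.copysign(1.0, v) if v != 0 else 0.0 for v in velocities]
    a11 = sum(si * si for si in s)
    a12 = sum(si * v for si, v in zip(s, velocities))
    a22 = sum(v * v for v in velocities)
    r1 = sum(si * f for si, f in zip(s, forces))
    r2 = sum(v * f for v, f in zip(velocities, forces))
    det = a11 * a22 - a12 * a12
    fc = (r1 * a22 - a12 * r2) / det
    b = (a11 * r2 - a12 * r1) / det
    return fc, b

# Synthetic data generated from fc = 0.3 N and b = 2.0 N*s/m (no noise).
vel = [-0.10, -0.05, -0.02, 0.02, 0.05, 0.10]
force = [0.3 * math.copysign(1.0, v) + 2.0 * v for v in vel]
fc, b = fit_coulomb_viscous(vel, force)
print(round(fc, 3), round(b, 3))
```

A baseline like this is useful for judging how much a learned friction model improves capsule positioning over a fixed analytical form.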

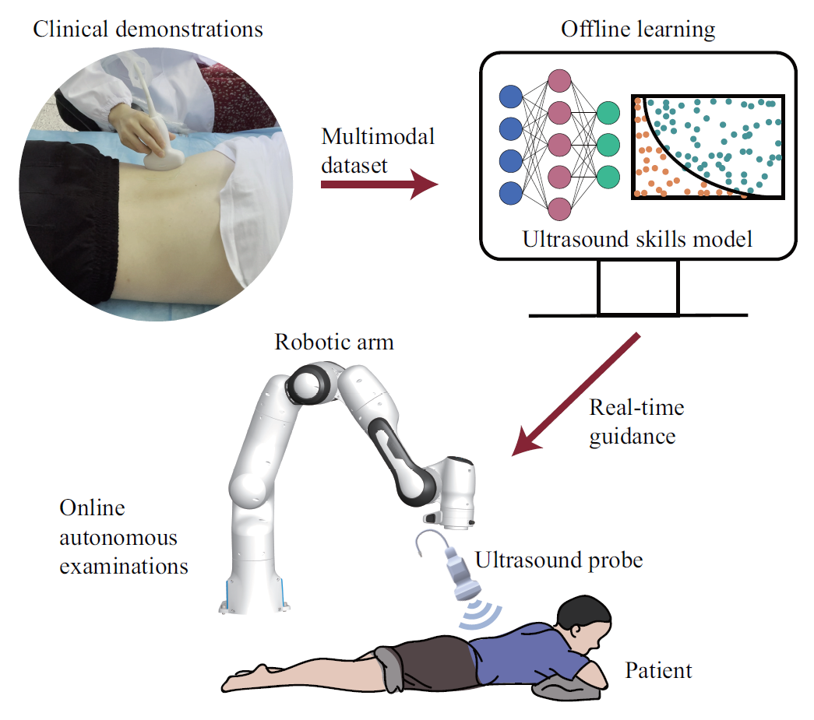

Learning Autonomous Ultrasound through Latent Representation and Skills Adaptation

As demand for medical ultrasound examinations grows, robotic ultrasound systems can facilitate the scanning process and relieve professional sonographers of repetitive and tedious work. Despite recent progress, enabling robots to autonomously accomplish ultrasound examinations is still challenging, largely due to the lack of a proper task representation and of a skill adaptation approach that generalizes scanning skills across different patients. To solve these problems, in this paper we propose a latent task representation and a skill adaptation method for autonomous ultrasound. During the offline learning stage, the multimodal ultrasound skills, comprising clinically demonstrated ultrasound images, probe orientations and contact forces, are fused and embedded into a low-dimensional space by a fully self-supervised framework. The demonstrations in latent space are then encapsulated in a probabilistic model. During the online execution stage, the optimal prediction is selected and evaluated by the probabilistic model. For any prediction with an unstable or uncertain state, an adaptation optimizer fine-tunes it to a nearby, stable prediction in the high-confidence region. Experimental results show that the proposed approach can generate ultrasound scanning strategies for diverse populations, with similarity to the sonographers’ demonstrations and quantitative results significantly better than those of our previous method.
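
A minimal sketch of the adaptation idea, assuming (hypothetically) that the probabilistic model is a single isotropic Gaussian in latent space with mean `mu` and variance `var`: the optimizer minimizes ||z - z0||^2 - lam * log p(z), trading closeness to the original prediction `z0` against model confidence. Gradient descent is used for clarity; for this particular objective the minimizer also has the closed form z* = (z0 + (lam / (2 var)) mu) / (1 + lam / (2 var)). All values are illustrative.

```python
def adapt_latent(z0, mu, var=0.5, lam=1.0, lr=0.1, steps=200):
    """Gradient descent on ||z - z0||^2 + lam * ||z - mu||^2 / (2 * var)."""
    z = list(z0)
    for _ in range(steps):
        grad = [2 * (zi - z0i) + lam * (zi - mi) / var
                for zi, z0i, mi in zip(z, z0, mu)]
        z = [zi - lr * g for zi, g in zip(z, grad)]
    return z

z0 = [2.0, -1.0]   # hypothetical low-confidence latent prediction
mu = [0.0, 0.0]    # hypothetical high-confidence centre of the model
z = adapt_latent(z0, mu)
print([round(v, 3) for v in z])
```

With `lam = 1` and `var = 0.5`, the closed form gives the midpoint of `z0` and `mu`; a larger `lam` pulls the adapted prediction further into the high-confidence region.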

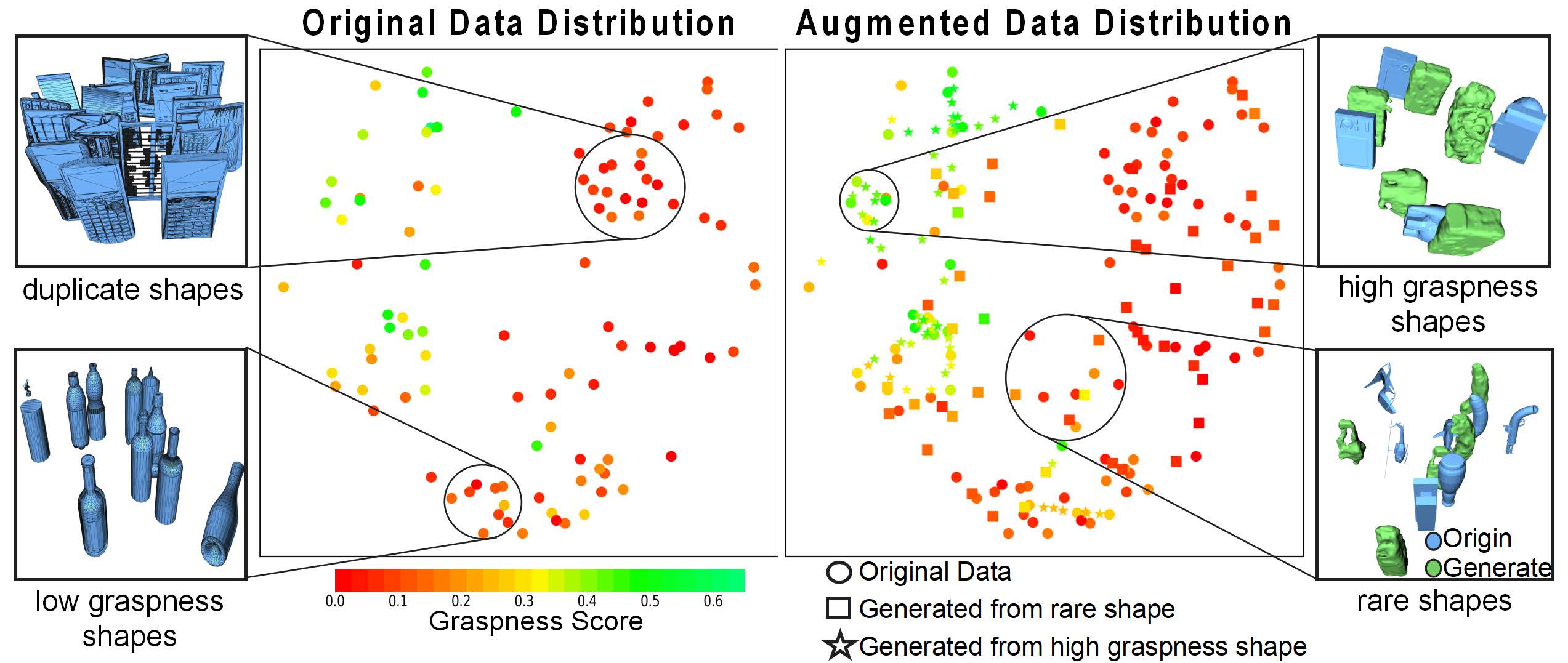

Learning Grasp Ability Enhancement through Deep Shape Generation

Data-driven, and especially deep learning-based, approaches have become the dominant paradigm for robotic grasp planning over the past decade. However, the performance of these methods depends heavily on the quality of the available training dataset. In this paper, we propose a framework that generates object shapes to augment the grasping dataset and thereby improve the grasp ability of a pre-designed deep neural network. First, the object shapes are embedded into a low-dimensional feature space using an encoder-decoder network. Then, rarity and graspness scores are computed for each object shape using outlier detection and grasp quality criteria. Finally, new objects are generated in feature space from the features of original objects with high rarity and graspness scores. Experimental results show that the grasp ability of a deep-learning-based grasp planning network can be effectively improved with the generated object shapes.
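
The selection-and-generation step can be sketched under stated assumptions: rarity is taken as the mean distance to the k nearest neighbours in feature space (one simple outlier score; the page does not say which detector is used), graspness scores are given, the two are combined by a product, and new feature vectors are produced by linear interpolation between pairs of the top-scoring shapes. Feature vectors, scores and the combination rule are hypothetical; decoding features back to object meshes is omitted.

```python
import itertools
import math

def knn_rarity(features, k=2):
    """Mean distance to the k nearest other points (larger = rarer)."""
    scores = []
    for i, f in enumerate(features):
        dists = sorted(math.dist(f, g) for j, g in enumerate(features) if j != i)
        scores.append(sum(dists[:k]) / k)
    return scores

def generate(features, graspness, top=3, alphas=(0.25, 0.5, 0.75)):
    """Rank shapes by rarity * graspness and interpolate between the top ones."""
    rarity = knn_rarity(features)
    combined = [r * g for r, g in zip(rarity, graspness)]
    ranked = sorted(range(len(features)), key=lambda i: combined[i], reverse=True)
    chosen = [features[i] for i in ranked[:top]]
    new = []
    for a, b in itertools.combinations(chosen, 2):
        for t in alphas:
            new.append(tuple((1 - t) * ai + t * bi for ai, bi in zip(a, b)))
    return ranked[:top], new

# Hypothetical 2-D latent codes and graspness scores in [0, 1].
feats = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (1.0, 1.0), (0.9, 0.1), (0.1, 0.9)]
grasp = [0.9, 0.8, 0.7, 0.6, 0.9, 0.5]
selected, new_codes = generate(feats, grasp)
print(selected, len(new_codes))
```

The three clustered shapes near the origin score low on rarity, so the generated codes concentrate in the sparsely populated regions of the feature space.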

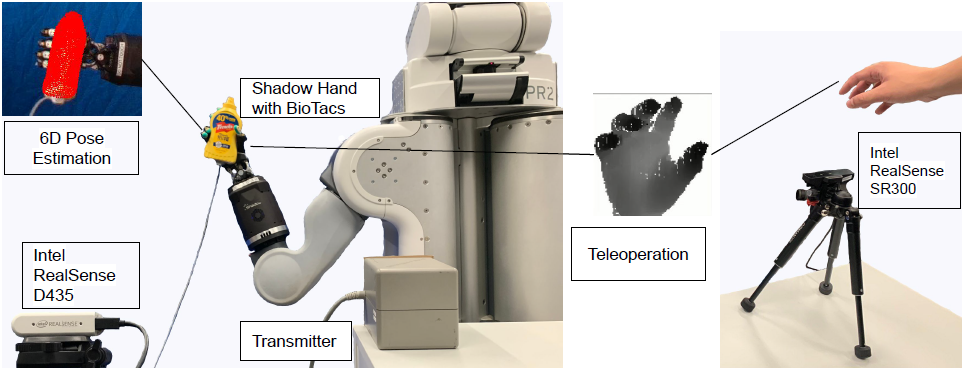

PoseFusion: Robust Object-in-Hand Pose Estimation with SelectLSTM

Accurate estimation of the relative pose between an object and a robot hand is critical for many manipulation tasks. However, most existing object-in-hand pose datasets use two-finger grippers and assume that the object remains fixed in the hand without any relative movement, which is not representative of real-world scenarios. To address this issue, we propose a 6D object-in-hand pose dataset collected by teleoperating an anthropomorphic Shadow Dexterous Hand. Our dataset comprises RGB-D images, proprioception and tactile data, covering diverse grasping poses, finger contact states and object occlusions. To overcome significant hand occlusion and limited tactile sensor contact in real-world scenarios, we propose PoseFusion, a hybrid multimodal fusion approach that integrates information from the visual and tactile perception channels. PoseFusion generates three candidate object poses from three estimators (tactile only, visual only, and visuo-tactile fusion), which are then filtered by a SelectLSTM network to select the optimal pose, avoiding inferior fusion poses resulting from modality collapse. Extensive experiments demonstrate the robustness and advantages of our framework. All data and code are available on the project website: https://elevenjiang1.github.io/ObjectInHand-Dataset/.

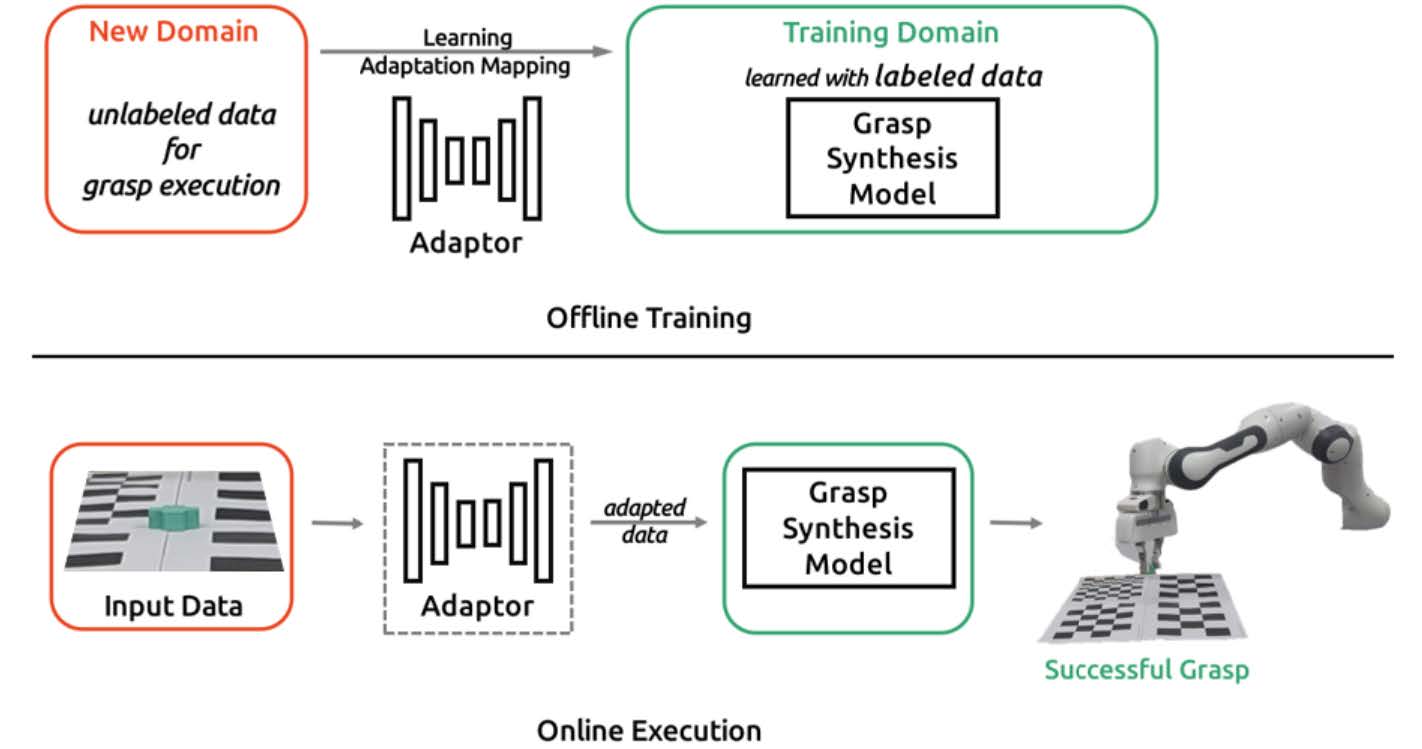

GraspAda: Deep Grasp Adaptation through Domain Transfer

Learning-based methods for robotic grasping have been shown to yield high performance. However, they rely on well-labeled datasets that are expensive to acquire. In addition, how to generalize the learned grasping ability across different scenarios remains an open problem. In this paper, we present a novel grasp adaptation strategy that transfers the learned grasping ability to new domains based on visual data, using a new grasp feature representation. We present a conditional generative model for visual data transformation. Leveraging the deep feature representation capacity of the well-trained grasp synthesis model, our approach uses feature-level contrastive representation learning and adopts adversarial learning on the output space. In this way, we bridge the domain gap between the new domain and the training domain while maintaining consistency during the adaptation process. Using input grasp data transformed by the generator, our trained model can generalize to new domains without any fine-tuning. The proposed method is evaluated on benchmark datasets and in real-robot experiments. The results show that our approach achieves high performance in new scenarios.

A Tendon-Driven Soft Hand for Dexterous Manipulation

Thanks to their flexible materials, soft robots can undergo large deformations and interact safely with the environment, which leads to a broad range of applications. However, due to their hyper-redundant nature and the inherent nonlinearity of both their material properties and geometric deformations, formulating an effective kinematic model for control is challenging. Analytical forward kinematics (FK) solutions are limited to specific designs with relatively simple shapes, which makes computing inverse kinematics (IK) for general soft robots with complex structures difficult. The ability to compute IK solutions rapidly and reliably is therefore crucial for improving control precision and response speed in practical tasks involving redundant soft robots. In this project, we first developed an anthropomorphic hand with flexible microstructure fingers capable of controllable-stiffness deformation, enabling effective and adaptive object grasping. A synergy mechanism was incorporated to replicate the coordinated, ordered motions of the human hand observed during the grasp approach phase. This design enables the hand to grasp objects of various shapes using either a single actuator on the synergy mechanism or independent actuators on each finger for more specialized tasks. Furthermore, grasping is enhanced by flexible tactile sensors integrated on the fingertips and palm. Going forward, we aim to develop a learning-based approach that uses the finite element method (FEM) to enable real-time IK computation for the highly nonlinear soft hand. The ultimate objective is to improve the control accuracy and response frequency of the entire system.